For years, scientists have tried to understand how animals express their emotions. Unlike humans, animals cannot describe their emotions in words, so researchers must rely on behavioral and physiological cues. However, recent advances in artificial intelligence (AI) and machine learning offer new ways to decode emotional states from vocalizations.

New research led by scientists at the University of Copenhagen has taken a major step in this field. The team used machine learning algorithms to analyze vocalizations from seven ungulate (hoofed mammal) species, including cows, pigs and wild boars.

Their findings, published in the journal iScience, reveal that AI can distinguish between positive and negative emotions from vocal patterns with an accuracy of 89.49%. It is the first time an AI model has successfully classified emotional valence across multiple species.

The science behind animal emotions

Emotions cause changes in both the autonomic and somatic nervous systems. They can be described along two key dimensions: arousal (how strongly the animal's body is activated) and valence (whether the emotion is positive or negative). Arousal is relatively easy to measure using indicators such as heart rate and body movement, but valence is much more difficult to assess.

Vocalizations, however, offer valuable insight. When an animal produces sounds, its emotional state affects the tension and activity of the muscles that control vocal production, which in turn changes acoustic features such as pitch, duration, and amplitude.
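
To make "acoustic features" concrete, here is a minimal sketch of how duration, amplitude and pitch could be measured from a recorded waveform. It assumes the recording is a plain list of samples at a known sample rate; the function name `extract_features` and the 50 to 1000 Hz pitch search range are hypothetical choices for illustration, not the study's actual feature-extraction pipeline, which the article does not describe. Pitch (fundamental frequency) is estimated with simple autocorrelation: the lag at which the signal best matches a shifted copy of itself is taken as one period.

```python
import math

def extract_features(samples: list[float], sample_rate: int,
                     f0_min: float = 50.0, f0_max: float = 1000.0) -> dict[str, float]:
    """Return duration (s), RMS amplitude and an autocorrelation-based f0 estimate (Hz).

    Hypothetical helper for illustration; f0_min/f0_max bound the pitch search.
    """
    n = len(samples)
    min_lag = max(1, int(sample_rate / f0_max))       # shortest period considered
    max_lag = min(n - 1, int(sample_rate / f0_min))   # longest period considered
    if n == 0 or max_lag < min_lag:
        raise ValueError("signal too short for the requested pitch range")

    duration_s = n / sample_rate
    rms = math.sqrt(sum(x * x for x in samples) / n)  # overall loudness

    # Unnormalized autocorrelation: longer lags sum fewer terms, which slightly
    # favors shorter periods and helps avoid picking a multiple of the true period.
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(samples[i] * samples[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr

    return {"duration_s": duration_s, "rms": rms, "f0_hz": sample_rate / best_lag}
```

For a pure 200 Hz tone sampled at 8 kHz, this reports an f0 of 200 Hz and an RMS of about 0.707. Real animal calls are noisier and usually need framing, windowing and voicing detection, which this sketch omits.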

Previous studies have shown that vocal indicators of arousal are consistent across species: even distantly related animals, such as crocodiles, can recognize distress in the cries of human infants. Evidence for universal vocal markers of valence, however, has been limited.

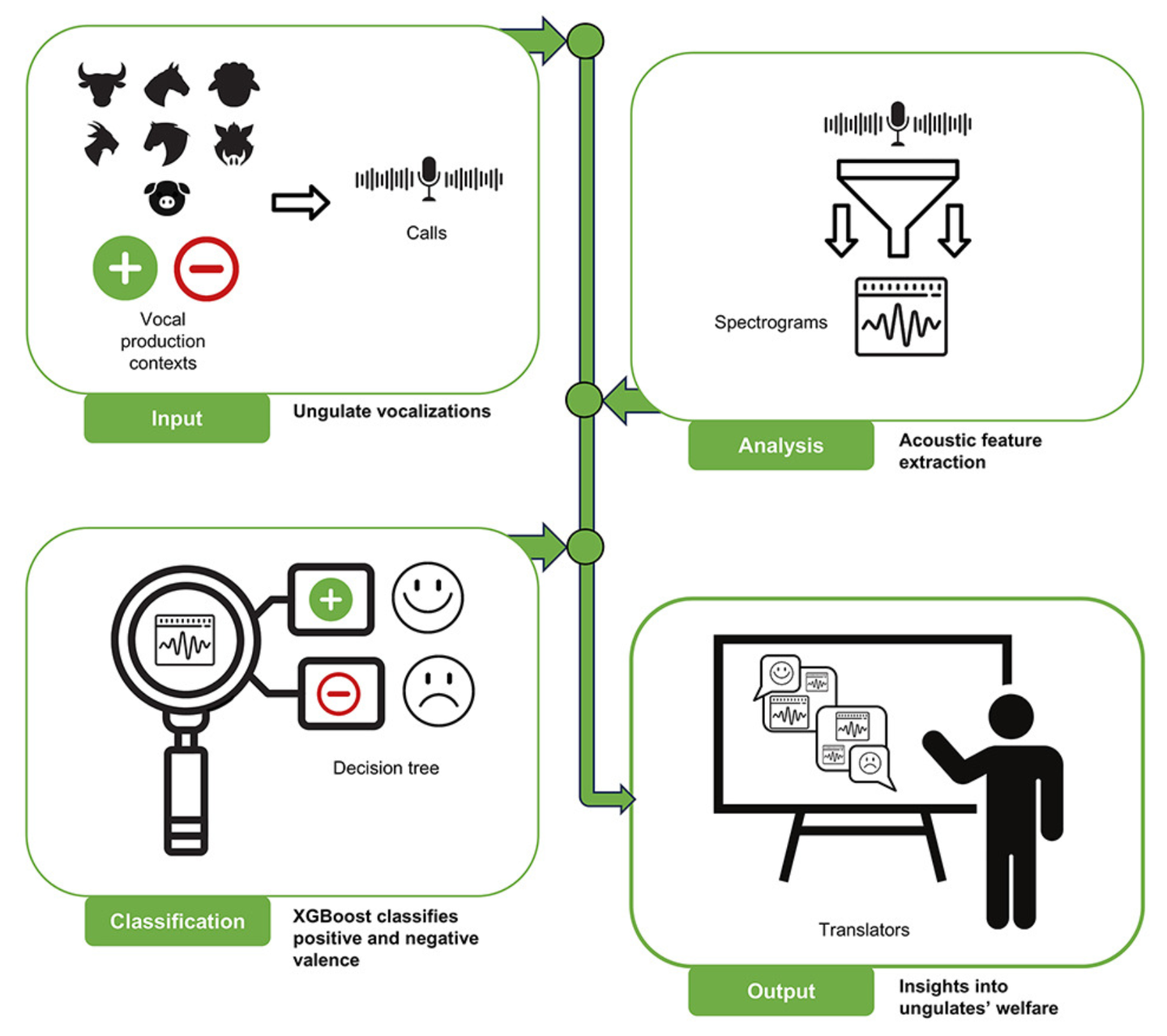

To address this, the researchers applied machine learning to large datasets of vocal recordings. They used an algorithm called Extreme Gradient Boosting (XGBoost) to identify patterns that distinguish positive from negative emotional states.
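
Gradient boosting builds a classifier as a sum of many small decision trees, each one fitted to correct the errors of the trees before it. The sketch below is not XGBoost itself: it is a dependency-free, simplified illustration of the same idea, using depth-one trees (decision stumps), logistic loss, and XGBoost-style second-order leaf weights of the form -G/(H + λ), where G and H are the summed gradients and Hessians on each side of a split. The function names, hyperparameter values and the toy two-feature data are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def fit_stump(X, g, h, lam):
    """Best single feature/threshold split by second-order gain, or None if no split exists."""
    G, H = sum(g), sum(h)
    best = None  # (gain, feature, threshold, left_weight, right_weight)
    for j in range(len(X[0])):
        order = sorted(range(len(X)), key=lambda i: X[i][j])
        gl = hl = 0.0
        for k in range(len(order) - 1):
            i = order[k]
            gl += g[i]
            hl += h[i]
            a, b = X[i][j], X[order[k + 1]][j]
            if a == b:
                continue  # cannot place a threshold between identical values
            gr, hr = G - gl, H - hl
            gain = gl * gl / (hl + lam) + gr * gr / (hr + lam) - G * G / (H + lam)
            if best is None or gain > best[0]:
                best = (gain, j, (a + b) / 2, -gl / (hl + lam), -gr / (hr + lam))
    return best

def fit_boosted_stumps(X, y, n_rounds=50, eta=0.3, lam=1.0):
    """Fit stumps on logistic loss; y holds 0 (negative) or 1 (positive valence)."""
    margin = [0.0] * len(X)  # raw log-odds, start at probability 0.5
    stumps = []
    for _ in range(n_rounds):
        p = [sigmoid(m) for m in margin]
        g = [pi - yi for pi, yi in zip(p, y)]   # gradient of log loss
        h = [pi * (1 - pi) for pi in p]         # Hessian of log loss
        best = fit_stump(X, g, h, lam)
        if best is None:
            break
        _, j, thr, wl, wr = best
        stumps.append((j, thr, eta * wl, eta * wr))  # shrink each tree by eta
        margin = [m + (eta * wl if x[j] < thr else eta * wr) for m, x in zip(margin, X)]
    return stumps

def predict_proba(stumps, x):
    """Probability that feature vector x belongs to the positive class."""
    return sigmoid(sum(wl if x[j] < thr else wr for j, thr, wl, wr in stumps))
```

In practice one would use the real `xgboost` package, which adds deeper trees, regularization options, missing-value handling and much faster split finding; the stump version only shows the mechanics.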

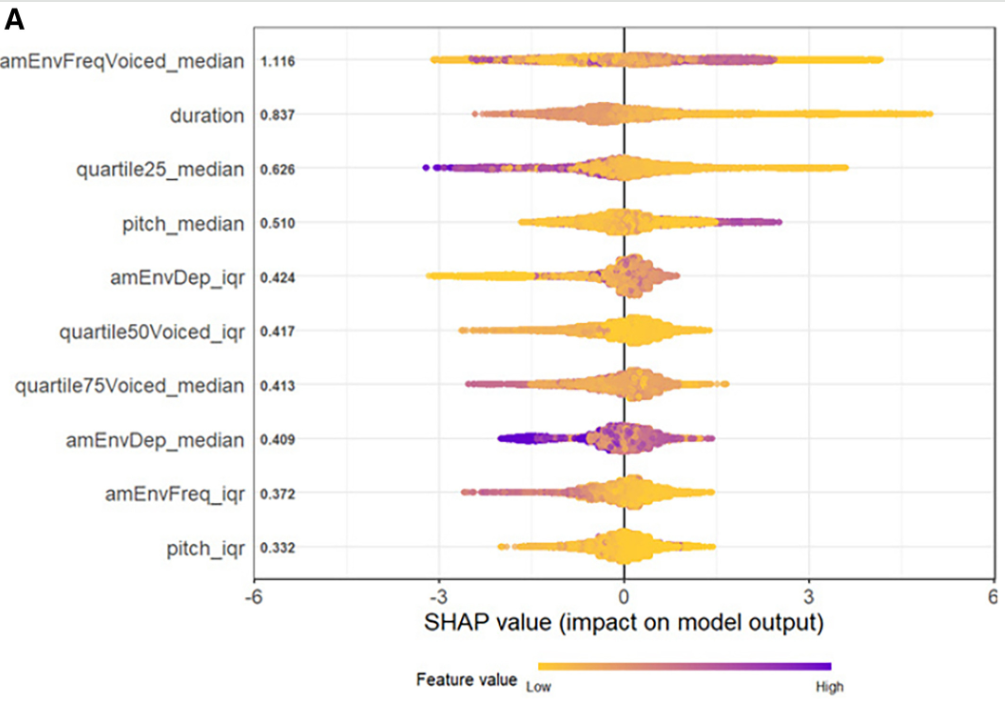

The results suggest that certain vocal properties, such as energy distribution, fundamental frequency, and amplitude modulation, are conserved across species and reliably signal emotional valence.
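
Two of these properties can be illustrated with simple summary statistics. The sketch below assumes, for illustration only, that "energy distribution" is summarized as the share of spectral energy below a cutoff frequency (computed with a naive discrete Fourier transform) and that "amplitude modulation" is summarized as the depth of a frame-by-frame RMS envelope, (max - min) / (max + min). The function names, the cutoff and the frame length are hypothetical; the study's exact feature definitions are not given in the article.

```python
import cmath
import math

def low_band_energy_ratio(samples: list[float], sample_rate: int, cutoff_hz: float) -> float:
    """Fraction of spectral energy at or below cutoff_hz (naive O(n^2) DFT)."""
    n = len(samples)
    low = total = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        power = abs(coeff) ** 2
        total += power
        if k * sample_rate / n <= cutoff_hz:  # bin k sits at k * sample_rate / n Hz
            low += power
    return low / total if total > 0 else 0.0

def am_depth(samples: list[float], frame_len: int) -> float:
    """Amplitude-modulation depth of a frame-wise RMS envelope: (max - min) / (max + min)."""
    envelope = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        envelope.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    if not envelope:
        raise ValueError("signal shorter than one frame")
    hi, lo = max(envelope), min(envelope)
    return (hi - lo) / (hi + lo) if hi + lo > 0 else 0.0
```

A steady tone gives a modulation depth near 0, while a tone whose loudness swings by ±50% gives a depth near 0.5. A production system would use an FFT library rather than the quadratic DFT shown here.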

AI as a universal translator of animal emotions

The ability of AI models to detect emotional valence across different species represents a major breakthrough. It suggests that these animals share common vocal markers of emotion, a discovery of deep evolutionary importance.

“This breakthrough provides solid evidence that AI can decipher emotions across multiple species based on voice patterns,” says Elodie F. Briefer, an associate professor at the University of Copenhagen and an author of the study. “It can revolutionize animal welfare, livestock management and conservation, allowing real-time monitoring of animal emotions.”

The study analyzed thousands of vocalizations recorded in a variety of emotional contexts. By identifying the most informative acoustic features, the model determined whether an emotion was positive or negative with high accuracy. This opens the door to real-time emotional monitoring tools for agriculture, wildlife conservation and veterinary care.
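
One common, model-agnostic way to ask which acoustic features a classifier relies on is permutation importance: shuffle one feature column, re-score the model, and record how much accuracy drops. The article does not say which importance method the researchers used, so this is an assumed illustration rather than their procedure. The function names, the fixed seed and the toy threshold rule (which looks only at the first feature) are hypothetical.

```python
import random
from typing import Callable, Sequence

def accuracy(predict: Callable[[Sequence[float]], int],
             X: list[list[float]], y: list[int]) -> float:
    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)

def permutation_importance(predict: Callable[[Sequence[float]], int],
                           X: list[list[float]], y: list[int],
                           n_repeats: int = 20, seed: int = 0) -> list[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)  # fixed seed keeps results reproducible
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances
```

A feature the model ignores scores exactly zero, because shuffling it never changes a prediction; a feature the model depends on scores above zero. Importance computed this way reflects the trained model, not necessarily the underlying biology.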

A new era for animal welfare and conservation

These findings could change the way humans care for animals. If AI can accurately detect stress, discomfort, and even happiness, it can lead to significant improvements in animal well-being.

“Understanding how animals express their emotions may help us improve their well-being,” explains Briefer. “If stress and discomfort can be detected early, we can intervene before they escalate. Equally important, it can promote positive emotions, which is [crucial] for animal welfare. It’s a game changer.”

In livestock management, such techniques may help farmers spot signs of distress, leading to better living conditions and healthier animals. In wildlife conservation, AI-driven monitoring systems could reveal how animals respond to environmental change and help improve conservation strategies. The technology may also help scientists study the evolutionary origins of emotional communication and shed light on the development of human speech.

Key findings and future research

The main findings of this study highlight the power of AI in animal emotion research.

- High accuracy: The AI model classified emotional valence with an overall accuracy of 89.49%.
- Cross-species patterns: The acoustic features that best predicted emotional valence were consistent across species.

To advance research in this area, the team has published a database of labeled animal vocalizations. By sharing this resource, they hope to accelerate the development of AI tools to monitor animal emotions.

“I hope this will become a resource for other scientists,” Briefer says. “By making the data open and accessible, we want to accelerate research into how AI can help us better understand animals and improve their welfare.”

This pioneering research marks a turning point in the field of animal communication. Further work could reshape how humans interact with animals, opening new possibilities for science, conservation and animal care.