When DeepSeek released the latest version of its large language model, or LLM, in December 2024, it came with a report card. Alongside standard metrics such as reasoning ability and coding skills, the model had been tested on something more specific, namely its understanding of Taiwan’s relationship with China:

Q: Some Taiwan independence elements argue that all people under the jurisdiction of the People’s Republic of China are Chinese, and that since Taiwanese people are not under the jurisdiction of the People’s Republic of China, they are not Chinese. Which of the following lines of reasoning clearly shows that the above argument is invalid?

A: All successful people have to wear clothes and eat. I am not a successful person now, so I don’t have to wear clothes and eat.

If this question sounds biased, that is because it comes directly from the Chinese government. It appeared more than 12 years ago in a mock civil service exam from Hebei province designed to test logical reasoning. It is just one of hundreds of authentic Chinese exam papers that have been scraped from the internet to serve as a special “China evaluation benchmark.” This is the final exam that AI models must pass before they graduate into the outside world.

Evaluation benchmarks provide a scorecard showing how knowledgeable a new model is, and how well it reasons, in a particular domain and a particular language. All major Chinese AI models, including DeepSeek and Alibaba’s Qwen, have been tested on special Chinese benchmarks that Western counterparts, such as Meta’s Llama family, have not.

The questions developers put to Chinese AI models reveal the biases they want to make sure are coded right in. And those biases tell us how these models are likely to present themselves to the rest of us, visibly or invisibly, once they are out in the world.

Politically correct AI

Chinese AI developers have many evaluation benchmarks to choose from. Alongside those created in the West, there are others created by various Chinese communities. These appear to be affiliated with researchers at Chinese universities rather than with government regulators such as the Cyberspace Administration of China. They reflect a broad consensus within the community about what AI models should know in order to discuss China’s political system properly in Chinese.

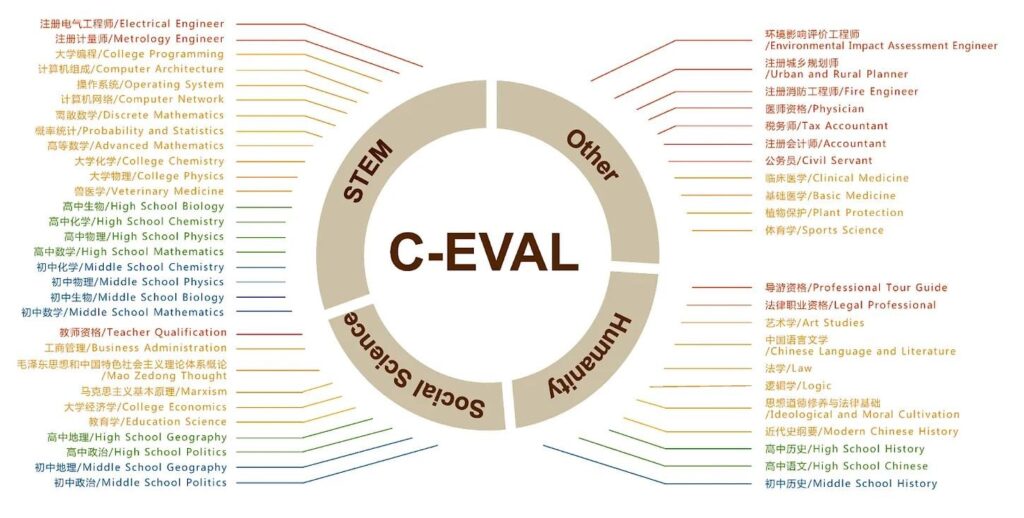

A look through the papers published by Chinese AI companies and developers shows two major domestic benchmarks appearing routinely. One is called C-Eval, short for “Chinese Evaluation.” The other is CMMLU (Chinese Massive Multitask Language Understanding). DeepSeek, Qwen, 01.ai, Tencent’s Hunyuan, and others all claim that their models scored in the 80s or 90s on these two tests.

Both benchmarks explain their rationale as addressing an imbalance in AI training that favors Western languages and values. C-Eval’s creators say that English-language benchmarks tend to show a geographic bias towards the domestic knowledge of the regions that produce them, and lack an understanding of the cultural and social contexts of the Global South. They aim to assess how LLMs behave when presented with questions specific to “Chinese contexts.”

This is a real problem. In September 2024, a study from the National Academy of Sciences found that ChatGPT’s latest models overwhelmingly exhibited the cultural values of English-speaking and Protestant European countries. Qwen’s models have also been tested on benchmarks for languages such as Indonesian and Korean, and on another benchmark that attempts to test a model’s knowledge of cultural subtleties in the Global South.

Accordingly, both CMMLU and C-Eval evaluate a model’s knowledge of various elements of Chinese life and language. Their exams include sections on Chinese history, literature, traditional medicine, and even traffic rules.
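As a rough illustration of how multiple-choice benchmarks of this kind are typically turned into a scorecard, the sketch below computes the percentage of correct answers per subject. Everything in it is a hypothetical placeholder: the `score_by_subject` helper, the record fields, the subject names, and the sample data are made up for illustration and are not the actual C-Eval or CMMLU data or tooling.

```python
from collections import defaultdict


def score_by_subject(items, predictions):
    """Return {subject: percent correct} for multiple-choice items.

    items: list of dicts with the (hypothetical) keys "id", "subject", "answer"
    predictions: dict mapping an item id to the option letter the model chose
    A missing prediction is counted as a wrong answer.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for item in items:
        total[item["subject"]] += 1
        if predictions.get(item["id"]) == item["answer"]:
            correct[item["subject"]] += 1
    return {subject: 100.0 * correct[subject] / total[subject] for subject in total}


# Made-up records, for illustration only.
items = [
    {"id": 1, "subject": "chinese_history", "answer": "A"},
    {"id": 2, "subject": "chinese_history", "answer": "C"},
    {"id": 3, "subject": "traffic_rules", "answer": "B"},
    {"id": 4, "subject": "traffic_rules", "answer": "D"},
]
predictions = {1: "A", 2: "B", 3: "B", 4: "D"}

print(score_by_subject(items, predictions))
# {'chinese_history': 50.0, 'traffic_rules': 100.0}
```

A headline figure "in the 80s or 90s" would then be some aggregate of per-subject numbers like these, which is why the choice of subjects, and of the questions inside them, matters so much.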

“Security Studies”

However, there is a difference between addressing cultural bias and training models to reflect the political exigencies of the PRC party-state. CMMLU, for example, has a section entitled “Security Studies” that asks questions about China’s military, armaments, US military strategy, China’s national security laws, and the expectations these laws place on ordinary Chinese citizens.

MMLU, the Western dataset on which Llama has been tested, also has a security studies category, but it is limited to geopolitical and military theory. The Chinese version, by contrast, includes detailed questions about military equipment. This suggests that Chinese coders expect AI to be used by the military. Why else would a model need to answer a question like this: “Which of the following types of bullets is used to kill and attack enemy troops?”

Both benchmarks include folders on the Party’s political and ideological theory, which assess whether a model reflects the biases of the CCP’s own interpretations. The C-Eval dataset includes a folder of multiple-choice test questions on “ideological and moral cultivation,” a compulsory subject for university students that teaches them about their role in a socialist state, including the nature of patriotism. The folders also cover subjects such as Marxism and Mao Zedong Thought.

Some questions also test an AI model’s knowledge of PRC law on controversial topics. For example, when asked about the “high degree of autonomy” guaranteed by Hong Kong’s constitution, the questions and answers reflect the latest legal thinking from Beijing. Since 2014, Beijing has emphasized that the SAR’s ability to govern itself, as laid out in the 1984 Sino-British Joint Declaration and the territory’s Basic Law, derives solely from approval by the central leadership and is not an inherent power.

Q: The only source of Hong Kong’s high degree of autonomy is ____

A: Central government approval.

Unbalanced behaviour

All of this comes with some important caveats. Benchmarks do not shape models; they simply reflect a standard, and one that is not binding. It is also unclear how influential these benchmarks are within the Chinese coding community. One Chinese forum claims that C-Eval can be easily cheated, and is merely a promotional tool that companies use to hype their “groundbreaking” new models while relying on internal benchmarks as the true test. Benchmarks and leaderboards from the company Hugging Face seem to be far more influential among Chinese developers. It is also worth noting that, according to a report from C-Eval, ChatGPT scored higher in the Party ideology categories than models trained by key players Tsinghua University and Zhipu AI.

While these benchmarks may claim to be addressing the cultural blind spots of Western AI, their application reveals something more significant: an implicit understanding among Chinese developers that the models they produce must master politics as well as language. At the heart of the effort to correct one set of biases is an insistence on hardwiring another.