Read the paper on arXiv →

The AI Now Institute has released a new report, Safety Co-Option and Compromised National Security: The Self-Fulfilling Prophecy of Weakened AI Risk Thresholds, on how today’s AI safety efforts, led primarily by industry engineers, weaken long-standing safety protocols and undermine US security.

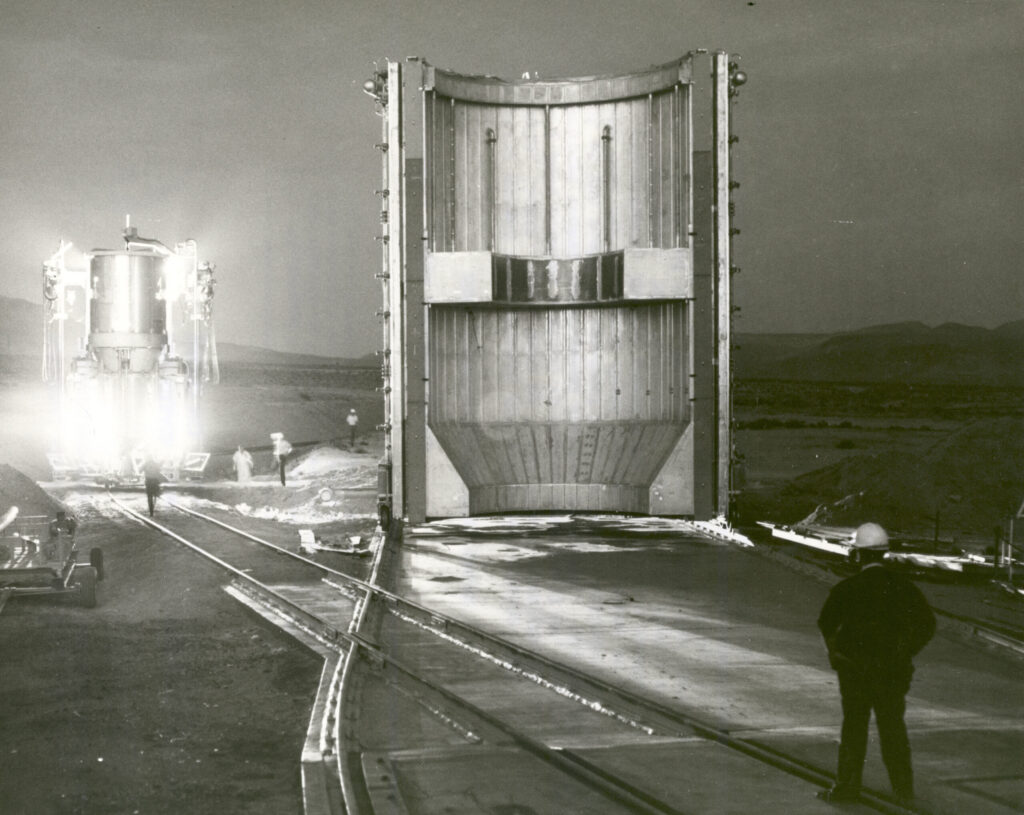

The report examines how an unfounded military AI narrative and speculative concerns about “existential risks” are used to justify the accelerated deployment of military AI systems, in conflict with the safety and reliability standards that have historically governed other high-risk technologies, such as nuclear systems. The result is the normalization of AI systems that are untested, unreliable, and actively erode the security and capabilities of defense and civilian critical infrastructure.

“There is a militaristic drive to adopt AI, led primarily by AI labs, and engineers are placing life-and-death decisions in the hands of people with little public accountability,” says Heidy Khlaaf, Chief AI Scientist at the AI Now Institute. “We are seeing the erosion of proven assessment approaches in favor of vague claims of capability that do not meet even the most basic safety thresholds.”

Safety revisionism and its impact on national security

The report draws lessons from the first established risk frameworks used to manage nuclear systems during the Cold War era. These frameworks set invaluable safety and reliability goals and helped the United States establish technical advantages and defensive capabilities against its adversaries.

Rather than preserving the rigorous safety and assessment processes essential to national security, AI engineers have persistently argued for a cost-benefit justification of accelerated AI adoption, at the expense of lowered safety and security thresholds. They have sought to replace traditional safety frameworks with ill-defined “capabilities” or “alignment” alternatives that deviate from established military standards. This “safety revisionism” could undermine US military and technological capabilities against China and other adversaries.

An agenda for course correction

The report calls on policymakers, defense officials, and global governance bodies to re-establish democratic oversight, ensuring that AI deployed in safety-critical or military contexts is subject to the same rigorous, context-specific standards that have long defined responsible technology adoption. “Capability evaluations” and “red teaming” are weak substitutes for the existing test, evaluation, verification, and validation (TEVV) frameworks, which assess a system's fitness for purpose in line with strategic and tactical defense goals.

The lethal and geopolitical consequences of AI in military applications pose very real and existential risks. “How safe and secure is it?” asks the report. Until that question is answered by society, not just by engineers, we risk eroding the safety, security, and trust in AI systems embedded in our most important institutions.