
NVIDIA today released Cosmos Reason 2, the latest advancement in open reasoning vision language models for physical AI. Cosmos Reason 2 is more accurate than previous versions and tops the Physical AI Bench and Physical Reasoning leaderboards as the #1 open model for visual understanding.

NVIDIA Cosmos Reason 2: A Reasoning Vision Language Model for Physical AI

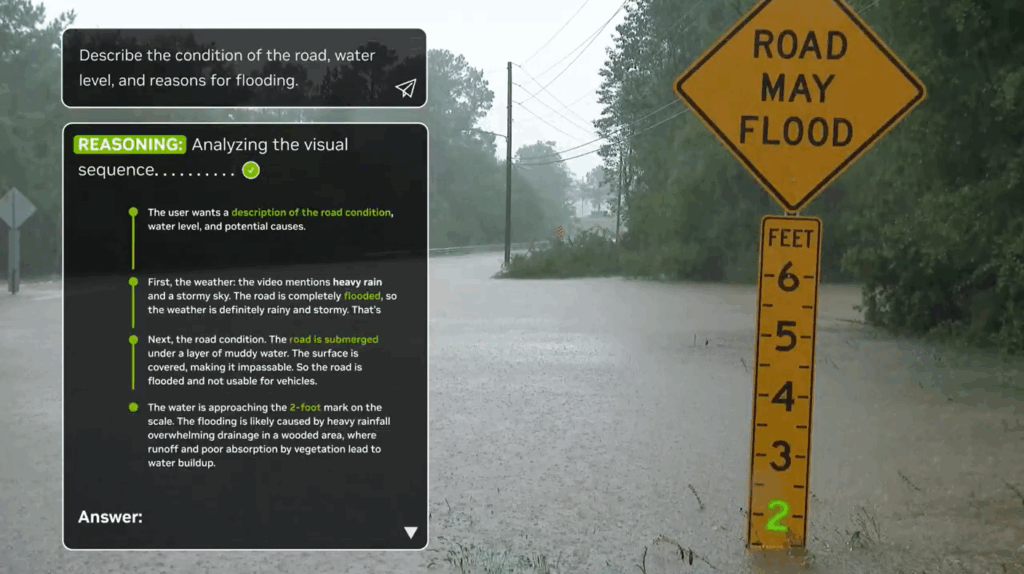

Since their introduction, vision language models have improved rapidly at tasks such as recognizing objects and patterns in images. But they still struggle with tasks that humans find natural, such as planning several steps ahead, dealing with uncertainty, and adapting to new situations. Cosmos Reason is designed to bridge this gap by giving robots and AI agents stronger common sense and reasoning so they can solve complex problems step by step.

Cosmos Reason 2 is a state-of-the-art open reasoning vision language model (VLM) that enables robots and AI agents to see, understand, plan, and act in the physical world as humans do. It uses common sense, physics, and prior knowledge to understand how objects move through space and time, so it can handle complex tasks, adapt to new situations, and work out solutions step by step.

✨ Key highlights

Improved spatio-temporal understanding and timestamp accuracy.

Flexible edge-to-cloud deployment, with 2B and 8B parameter model sizes to balance performance and footprint.

Support for an expanded set of spatial understanding and visual recognition features – 2D/3D point localization, bounding box coordinates, trajectory data, OCR support.

256K input tokens, up from 16K in Cosmos Reason 1, for better understanding of long contexts.

Easy-to-use Cosmos Cookbook recipes for adapting the model to multiple use cases.
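Point localization, bounding boxes, and trajectories come back from the model as text, so downstream code usually has to map them onto the original image. The sketch below assumes, purely for illustration, that the reply is a JSON list and that coordinates are normalized to a 0–1000 range (a convention used by some Qwen-family VLMs). The `label`, `bbox_2d` keys, the normalization scale, and the helper names are all hypothetical; check the Cosmos Reason 2 documentation for the actual output format.

```python
import json
from dataclasses import dataclass

# ASSUMPTION: coordinates in the model's reply are normalized to [0, 1000].
# This is illustrative only; confirm the real convention in the model docs.
NORM_SCALE = 1000.0


@dataclass
class Box:
    label: str
    x1: int
    y1: int
    x2: int
    y2: int


def to_pixels(value: float, size: int) -> int:
    """Clamp a normalized coordinate to [0, NORM_SCALE] and scale it to `size` pixels."""
    clamped = min(max(value, 0.0), NORM_SCALE)
    return round(clamped / NORM_SCALE * size)


def parse_boxes(reply: str, width: int, height: int) -> list[Box]:
    """Parse a hypothetical reply like [{"label": "cup", "bbox_2d": [x1, y1, x2, y2]}]."""
    boxes = []
    for item in json.loads(reply):
        x1, y1, x2, y2 = item["bbox_2d"]
        boxes.append(Box(
            label=item["label"],
            x1=to_pixels(x1, width), y1=to_pixels(y1, height),
            x2=to_pixels(x2, width), y2=to_pixels(y2, height),
        ))
    return boxes


def parse_points(reply: str, width: int, height: int) -> list[tuple[int, int]]:
    """Parse a hypothetical JSON list of [x, y] pairs, e.g. trajectory waypoints."""
    return [(to_pixels(x, width), to_pixels(y, height)) for x, y in json.loads(reply)]


# Example: one hypothetical reply for a 1920x1080 frame.
reply = '[{"label": "forklift", "bbox_2d": [100, 200, 500, 800]}]'
print(parse_boxes(reply, width=1920, height=1080))
# [Box(label='forklift', x1=192, y1=216, x2=960, y2=864)]
```

Clamping keeps slightly out-of-range values inside the image instead of producing negative or oversized pixel coordinates.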

🤖 Common use cases

Video analytics AI agents – These agents extract valuable insights from large volumes of video data to optimize processes. Cosmos Reason 2 builds on the capabilities of Cosmos Reason 1 and adds OCR support, 2D/3D point localization, and set-of-mark understanding.

Developers can quickly start developing video analytics AI agents using the NVIDIA Blueprint for Video Search and Summarization (VSS) that uses Cosmos Reason as a VLM.

Salesforce is transforming workplace safety and compliance by analyzing video footage captured by Cobalt robots, using Agentforce and a VSS blueprint with Cosmos Reason as the VLM.

Data annotation and critique – Enables developers to automate high-quality annotation and critique of large, diverse training datasets. Cosmos Reason provides timestamps and detailed descriptions of real or synthetically generated training videos.

Uber is exploring Cosmos Reason 2 to generate accurate, searchable video captions for autonomous vehicle (AV) training data, helping teams efficiently identify critical driving scenarios. This co-authored recipe for AV video captioning and VQA shows how to fine-tune and evaluate Cosmos Reason 2-8B on annotated AV video. The fine-tuned model improved across multiple metrics: BLEU score rose by 10.6% (0.113 → 0.125), MCQ-based VQA accuracy by 0.67 percentage points (80.18% → 80.85%), and LingoQA by 13.8 percentage points (63.2% → 77.0%). These gains indicate effective domain adaptation for AV applications.
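The reported figures mix two kinds of change. BLEU is a score, so its gain is a relative percentage of the starting value, while the VQA results are already percentages, so their gains are absolute differences in percentage points. The short calculation below reproduces the numbers from the before/after values quoted above; it also shows that the LingoQA jump from 63.2% to 77.0% is 13.8 percentage points, which corresponds to roughly a 21.8% relative improvement. The helper names are illustrative.

```python
def relative_change_pct(before: float, after: float) -> float:
    """Relative change, expressed as a percentage of the starting value."""
    return (after - before) / before * 100


def point_change(before_pct: float, after_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return after_pct - before_pct


# Before/after values as reported for Cosmos Reason 2-8B fine-tuned on AV video.
bleu_rel = relative_change_pct(0.113, 0.125)
mcq_pp = point_change(80.18, 80.85)
lingo_pp = point_change(63.2, 77.0)
lingo_rel = relative_change_pct(63.2, 77.0)

print(f"BLEU: +{bleu_rel:.1f}% relative")
print(f"MCQ VQA: +{mcq_pp:.2f} pp")
print(f"LingoQA: +{lingo_pp:.1f} pp ({lingo_rel:.1f}% relative)")
```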

Robot planning and reasoning – Serves as the brain for deliberate, methodical decision-making in a robot's vision-language-action (VLA) model. In addition to determining the next step, Cosmos Reason 2 now also provides trajectory coordinates.

Encord provides native support for Cosmos Reason 2 in its Data Agent library and AI data platform, allowing developers to leverage Cosmos Reason 2 as a VLA for robotics and other physical AI use cases.

Companies like Hitachi, Milestone, and VAST Data are using Cosmos Reason to power robotics, autonomous driving, and video analytics AI agents for transportation and workplace safety.

Try Cosmos Reason 2 at build.nvidia.com and explore the latest features, including sample prompts for generating bounding boxes and robot trajectories. You can also upload your own videos and images for analysis.
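Many models in the build.nvidia.com catalog can be called through an OpenAI-compatible chat completions API. The sketch below only builds such a request body for an image plus a bounding-box prompt, without sending it. The model identifier, prompt wording, and `build_bbox_request` helper are hypothetical; copy the exact model ID and endpoint from the model's page on build.nvidia.com.

```python
import base64

# ASSUMPTION: hypothetical model ID; use the one shown on build.nvidia.com.
MODEL_ID = "nvidia/cosmos-reason2-8b"


def build_bbox_request(image_bytes: bytes, target: str, mime: str = "image/jpeg") -> dict:
    """Build an OpenAI-style chat completion body that asks for bounding boxes.

    The image is embedded inline as a base64 data URL. The prompt text is an
    illustrative example, not an official Cosmos Reason 2 prompt.
    """
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": data_url}},
                    {
                        "type": "text",
                        "text": f"Locate every {target} in the image and return "
                                "their bounding boxes as a JSON list.",
                    },
                ],
            }
        ],
        "max_tokens": 512,
    }
```

The resulting dictionary would then be sent as JSON, with an API key, to the chat completions endpoint listed for the model; any OpenAI-compatible client can send the same body.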

Download the Cosmos Reason 2 models (2B and 8B) from Hugging Face, or use Cosmos Reason 2 in the cloud. The model will soon be available on Amazon Web Services, Google Cloud, and Microsoft Azure. To get started, check out the Cosmos Reason 2 documentation and the Cosmos Cookbook.

Other models in the Cosmos family:

🔮 Cosmos Predict 2.5

Cosmos Predict is a generative AI model that predicts the future state of the physical world as a video based on text, image, or video input.

Leads physical AI benchmarks for quality, accuracy, and overall consistency. Generates up to 30 seconds of physically and temporally consistent video per generation. Supports multiple frame rates and resolutions. Pre-trained on 200 million clips. Available as 2B and 14B pre-trained models and various 2B post-trained models for multi-view generation, action conditioning, and autonomous vehicle training.

Check model card >>

🔁 Cosmos Transfer 2.5

Cosmos Transfer is our lightest multi-control model, built for video-to-world style transfer.

Scale a single simulation or spatial video across different environments and lighting conditions. Improved prompt adherence and physics alignment. Use with NVIDIA Isaac Sim™ or NVIDIA Omniverse NuRec for sim-to-real transfer.

Check model card >>

🤖 NVIDIA GR00T N1.6

NVIDIA GR00T N1.6 is an open reasoning Vision Language Action (VLA) model built specifically for humanoid robots to unlock whole-body control and improve reasoning and context understanding with NVIDIA Cosmos Reason.

Resources

🧑🏻🍳 Read Cosmos Cookbook → https://nvda.ws/4qevli8

📚 Explore models and datasets → https://github.com/nvidia-cosmos

⬇️ Try Cosmos models with a hosted catalog → https://nvda.ws/3Yg0Dcx

💻 Join the Cosmos community → https://discord.gg/u23rXTHSC9

🗳️ Contribute to Cosmos Cookbook → https://nvda.ws/4aQcBkk