Published October 30, 2024

Authors: Zalán Borsos, Matt Sharifi, Marco Tagliasacchi

Our pioneering voice generation technology is helping people around the world interact with digital assistants and AI tools in more natural, conversational and intuitive ways.

Speech is central to human relationships. It helps people around the world exchange information and ideas, express emotions, and create mutual understanding. As our technology for producing natural, dynamic audio continues to improve, it is unlocking richer and more engaging digital experiences.

Over the past few years, we have been pushing the frontiers of audio generation, developing models that can create high-quality, natural-sounding audio from a variety of inputs, including text, tempo controls, and specific audio. This technology powers single-speaker audio in many Google products and experiments, including Gemini Live, Project Astra, Journey Voices, and YouTube’s automated dubbing, and is helping people around the world interact with more natural, conversational, and intuitive digital assistants and AI tools.

We recently collaborated with partners across Google to help develop two new features that can generate long-form conversations with multiple speakers, making complex content more accessible.

NotebookLM Audio Overviews turn uploaded documents into engaging and lively conversations. With one click, two AI hosts summarize your material, make connections between topics, and talk back and forth with each other. Illuminate creates formal, AI-generated discussions of research papers, making knowledge more accessible and easier to digest.

Here we provide an overview of the latest speech generation research that underpins all of these products and experimental tools.

Pioneering technology for audio generation

For many years, we have invested in speech generation research, exploring new ways to generate more natural dialogue in our products and experimental tools. In our previous research on SoundStorm, we demonstrated for the first time the ability to generate 30-second segments of natural dialogue between multiple speakers.

This extended our earlier work, SoundStream and AudioLM, which allowed us to apply many text-based language modeling techniques to the problem of audio generation.

SoundStream is a neural audio codec that efficiently compresses and decompresses audio input without sacrificing quality. As part of the training process, SoundStream learns how to map audio to different acoustic tokens. These tokens capture all the information needed to reconstruct the audio with high fidelity, including characteristics such as prosody and timbre.

AudioLM treats audio generation as a language modeling task and generates the acoustic tokens of codecs such as SoundStream. As a result, the AudioLM framework makes no assumptions about the type or composition of the audio being generated, and it can flexibly handle a variety of sounds without architectural adjustments. This makes it well suited to modeling multi-speaker dialogue.

Example of a multi-speaker dialogue generated by NotebookLM Audio Overview, based on a few potato-related documents.

Building on this research, our latest speech generation technology can produce two minutes of dialogue with improved naturalness, speaker consistency, and acoustic quality when given a dialogue script and speaker turn markers. The model performs this task in less than 3 seconds in a single inference pass on one Tensor Processing Unit (TPU) v5e chip. This means it generates audio more than 40 times faster than real time.
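The speed-up figure follows directly from the two numbers quoted above. The short Python sketch below works through the arithmetic. The variable names are illustrative, and because the generation time is only given as an upper bound, the result is a lower bound on the speed-up.

```python
# Real-time factor implied by the figures quoted in the text.
# Variable names are illustrative; the inputs are the article's stated numbers.
audio_seconds = 2 * 60      # a two-minute dialogue
generation_seconds = 3      # upper bound: "less than 3 seconds"

# Seconds of audio produced per second of compute (a lower bound,
# since the actual generation time is below 3 seconds).
speedup = audio_seconds / generation_seconds
print(f"at least {speedup:.0f}x faster than real time")
```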

Scaling the audio generation model

Scaling our single-speaker generation models to multi-speaker models then became a matter of data and model capacity. To help our latest speech generation model produce longer speech segments, we created an even more efficient speech codec that compresses audio into a sequence of tokens at rates as low as 600 bits per second, without compromising output quality.

The tokens generated by our codec have a hierarchical structure and are grouped by time frame. The first token in a group captures phonetic and prosodic information, and the last token encodes acoustic details.

Even with our new speech codec, producing a 2-minute dialogue requires generating over 5,000 tokens. To model these long sequences, we developed a specialized Transformer architecture that can efficiently handle hierarchies of information, matching the structure of our acoustic tokens.
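As a rough sanity check, the figures above bound how much information each token carries on average. The sketch below is a back-of-the-envelope calculation only. It assumes the codec runs at 600 bits per second for the whole clip (the text gives this only as the lowest rate) and treats 5,000 as a lower bound on the token count, so the per-token figure is an upper bound under that assumption.

```python
# Back-of-the-envelope token budget from the figures quoted in the text.
# Assumption: the codec runs at 600 bits/s for the entire clip.
bitrate_bps = 600        # bits per second
duration_s = 2 * 60      # two-minute dialogue
min_tokens = 5000        # "over 5000 tokens"

total_bits = bitrate_bps * duration_s

# With at least 5000 tokens sharing those bits, the average token
# carries at most this many bits.
max_avg_bits_per_token = total_bits / min_tokens

# The model must emit at least this many tokens per second of audio.
min_tokens_per_second = min_tokens / duration_s

print(f"total bits: {total_bits}")
print(f"average bits per token: at most {max_avg_bits_per_token:.1f}")
print(f"tokens per second of audio: at least {min_tokens_per_second:.1f}")
```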

With this technique, we can efficiently generate the acoustic tokens that correspond to the dialogue within a single autoregressive inference pass. Once generated, these tokens can be decoded back into an audio waveform using our speech codec.
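The generate-then-decode flow described here can be illustrated with a toy sketch. This is not the actual model or codec: `toy_next_token` and `toy_decode` are hypothetical stand-ins, and the group size and vocabulary size are made-up values. It only shows the shape of the loop, in which tokens are produced one at a time, each conditioned on everything generated so far, grouped by time frame from coarse to fine, and then passed to a decoder.

```python
import random
from typing import List

TOKENS_PER_FRAME = 3   # hypothetical: coarse-to-fine tokens per time frame
VOCAB_SIZE = 16        # hypothetical codebook size


def toy_next_token(history: List[int], rng: random.Random) -> int:
    """Hypothetical stand-in for the model's next-token prediction."""
    # A real model would run a Transformer over the history; this toy only
    # mixes the previous token with randomness so output depends on the past.
    previous = history[-1] if history else 0
    return (previous + rng.randrange(VOCAB_SIZE)) % VOCAB_SIZE


def generate(num_frames: int, seed: int = 0) -> List[List[int]]:
    """Autoregressively generate frames, each a group of tokens."""
    rng = random.Random(seed)
    history: List[int] = []
    frames: List[List[int]] = []
    for _ in range(num_frames):
        frame: List[int] = []
        for _level in range(TOKENS_PER_FRAME):
            token = toy_next_token(history, rng)
            history.append(token)   # every new token extends the context
            frame.append(token)
        frames.append(frame)
    return frames


def toy_decode(frames: List[List[int]]) -> List[float]:
    """Hypothetical stand-in for the codec decoder: one value per frame."""
    samples = []
    for frame in frames:
        # Earlier (coarser) tokens contribute more than later (finer) ones.
        value = sum(tok / VOCAB_SIZE ** (lvl + 1) for lvl, tok in enumerate(frame))
        samples.append(value)
    return samples


frames = generate(num_frames=4)
waveform = toy_decode(frames)
print(frames)
print(waveform)
```

The weighting in `toy_decode` mirrors, very loosely, the idea that the first tokens in a group carry the coarse information and the last tokens refine it. A real codec decoder is a learned neural network, not a fixed formula.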

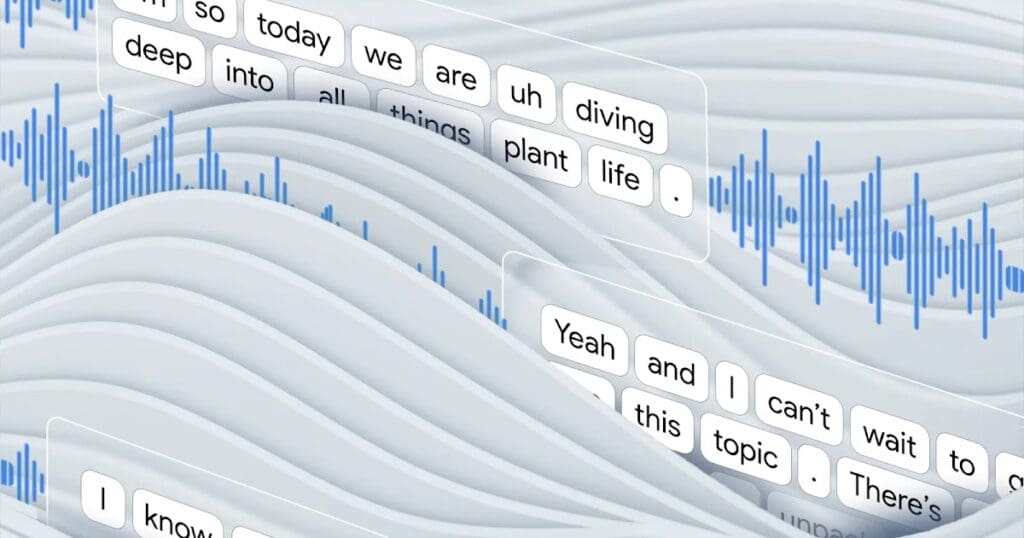

An animation showing how our speech generation model autoregressively produces a stream of audio tokens, which are decoded back into a waveform consisting of a dialogue between two speakers.

To teach our model how to generate realistic exchanges between multiple speakers, we pre-trained it on hundreds of thousands of hours of speech data. We then fine-tuned it on a much smaller dataset of dialogue with high acoustic quality and precise speaker annotations, consisting of unscripted conversations between a number of voice actors and including realistic disfluencies, the “umms” and “ahs” of real conversation. This step taught the model how to reliably switch between speakers during a generated dialogue and to output only studio-quality audio with realistic pauses, tone, and timing.

In line with our AI Principles and our commitment to developing and deploying AI technology responsibly, we have incorporated our SynthID technology to watermark non-transient AI-generated audio content from these models, to help safeguard against potential misuse of this technology.

A new speech experience awaits

We are currently focused on improving the model’s fluency and acoustic quality, adding more fine-grained controls for features such as prosody, and exploring how best to combine these advances with other modalities, such as video.

The potential applications for advanced speech generation are vast, especially when combined with the Gemini family of models. From enhancing the learning experience to making content more universally accessible, we’re excited to continue pushing the boundaries of what’s possible with voice-based technology.

Acknowledgment

Authors of this work: Zalán Borsos, Matt Sharifi, Brian McWilliams, Yunpeng Li, Damien Vincent, Félix de Chaumont Quitry, Martin Sundermeyer, Eugene Kharitonov, Alex Tudor, Victor Ungureanu, Karolis Misiunas, Sertan Girgin, Jonas Rothfuss, Jake Walker, Marco Tagliasacchi.

We would like to thank Leland Rechis, Ralph Leith, Paul Middleton, Poly Pata, Minh Truong, and RJ Skerry-Ryan for their important work on dialogue data.

We are very grateful to our collaborators at Labs, Illuminate, Cloud, Speech, and YouTube for their great work in incorporating these models into their products.

We would also like to thank Françoise Beaufays, Krishna Bharat, Tom Hume, Simon Okumine, and James Zhao for their guidance on the project.