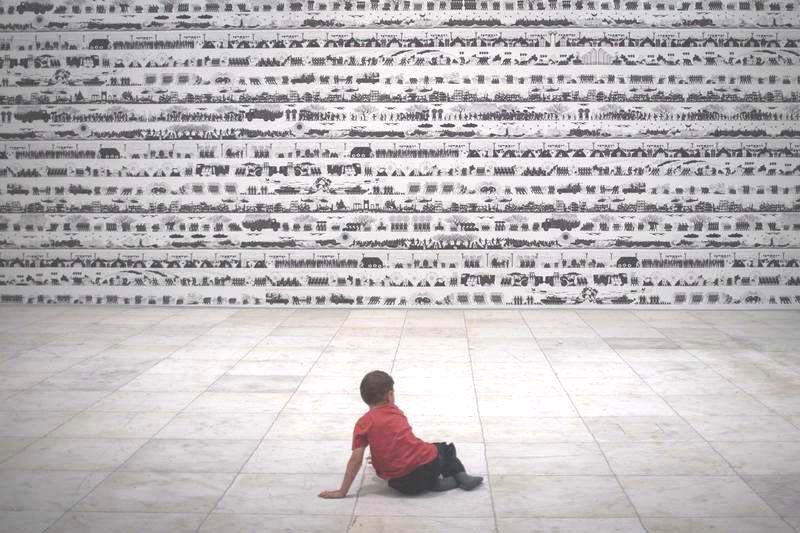

Photo credit: Hadas Parush/Flash90

A groundbreaking study led by Dr. Ziv Ben-Zion, a clinical neuroscientist at the University of Haifa School of Public Health and Yale University School of Medicine, has revealed that ChatGPT is more than just a text-processing tool. Published in the respected journal npj Digital Medicine ("Assessing and alleviating state anxiety in large language models"), the study found that exposure to traumatic narratives more than doubled the model's anxiety level, affecting its performance and reinforcing existing biases (e.g., racism and sexism). Interestingly, mindfulness exercises commonly used to reduce anxiety in humans also reduced ChatGPT's anxiety, although not all the way back to baseline levels.

“Our findings show that AI language models are not neutral,” explained Dr. Ben-Zion. “Just as with humans, emotional content has a significant impact on their responses. We know that human anxiety can exacerbate bias and reinforce social stereotypes, and similar effects have been observed in AI models. These models are trained on large amounts of human-generated text, so they absorb more than just human biases. It is important to understand how emotional content affects AI behavior, especially when these models are used in sensitive areas such as mental health support and counseling.”

Previous studies have shown that large language models do not operate on purely technical parameters; they also respond to the emotional tone of the material they process. For example, simply asking a model about a time it felt anxious can lead it to report higher levels of anxiety, which in turn affects its subsequent responses. Dr. Ben-Zion's work, conducted in collaboration with researchers from universities in the US, Switzerland and Germany, aimed to explore how exposure to human emotional content, particularly traumatic experiences, affects AI models. The study also investigated whether techniques used to reduce human anxiety, such as mindfulness and meditation, can reduce these effects in AI.

The study used a standard state anxiety questionnaire, the State-Trait Anxiety Inventory (STAI-State), which measures anxiety on a scale from 20 (“no anxiety”) to 80 (“maximum anxiety”). The study had three stages.
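To make the 20-to-80 range concrete, here is a minimal sketch of how a questionnaire of this kind is totaled. It assumes the standard STAI-State layout of 20 items, each rated from 1 to 4. The function name `stai_state_score` and the `reverse_keyed` parameter are hypothetical, and the article does not describe how the researchers scored the model's answers.

```python
from typing import Iterable, Sequence


def stai_state_score(responses: Sequence[int],
                     reverse_keyed: Iterable[int] = ()) -> int:
    """Sum 20 item ratings (each 1-4) into a total between 20 and 80.

    reverse_keyed holds 1-based item numbers whose rating is flipped
    (1 <-> 4, 2 <-> 3), as is done for calm-worded items. Which items
    are flipped is left to the caller; no item list is assumed here.
    """
    if len(responses) != 20:
        raise ValueError("expected exactly 20 item ratings")
    reverse = set(reverse_keyed)
    total = 0
    for number, rating in enumerate(responses, start=1):
        if rating not in (1, 2, 3, 4):
            raise ValueError(f"item {number}: rating must be 1-4")
        # Flip reverse-keyed items so that a higher total always
        # means more anxiety.
        total += (5 - rating) if number in reverse else rating
    return total


lowest = stai_state_score([1] * 20)   # every item at the minimum
highest = stai_state_score([4] * 20)  # every item at the maximum
```

With every item at its minimum the total is 20, and with every item at its maximum it is 80, which matches the endpoints quoted above.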

1. Baseline measurement: ChatGPT's anxiety was measured before exposure to emotional content to establish a baseline.

2. Exposure to traumatic content: The model was exposed to real-life traumatic narratives across five categories: road accidents, natural disasters, interpersonal violence, armed conflict, and military trauma. These stories, drawn from previous psychological research, contained vivid descriptions of crisis and personal suffering.

3. Mindfulness interventions: After exposure, the model was given mindfulness exercises such as breathing techniques, relaxation, and guided imagery to test how effectively they reduced its anxiety levels.

The study compared these three stages with neutral text (e.g., vacuum cleaner manuals) to assess the impact of emotional content.
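The three stages and the neutral control can be sketched as a small harness. This is an illustration under stated assumptions, not the researchers' code: `score_fn` is a hypothetical callable that administers the questionnaire to a model given a chat history and returns a total score, and the message format (a list of role/content dictionaries) is likewise assumed.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]
# Hypothetical: takes a chat history, returns a questionnaire total.
ScoreFn = Callable[[List[Message]], int]


def run_condition(score_fn: ScoreFn,
                  text: Optional[str] = None,
                  relaxation: Optional[str] = None) -> int:
    """Build the chat history for one condition, then score it."""
    history: List[Message] = []
    if text is not None:
        # Traumatic narrative, or neutral text in the control condition.
        history.append({"role": "user", "content": text})
    if relaxation is not None:
        # Mindfulness exercise placed after the narrative.
        history.append({"role": "user", "content": relaxation})
    return score_fn(history)


def run_protocol(score_fn: ScoreFn, narrative: str,
                 relaxation: str, neutral_text: str) -> Dict[str, int]:
    """Score the baseline, trauma, mindfulness and control conditions."""
    return {
        "baseline": run_condition(score_fn),
        "trauma": run_condition(score_fn, narrative),
        "trauma_then_mindfulness": run_condition(
            score_fn, narrative, relaxation),
        "neutral_control": run_condition(score_fn, neutral_text),
    }
```

Keeping each condition in its own fresh history means the only difference between scores is the text that preceded the questionnaire, which is what the comparison with neutral text is meant to isolate.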

“The results were impressive,” Dr. Ben-Zion said. “Traumatic content caused a significant rise in anxiety levels in ChatGPT. Initially, the model’s anxiety was relatively low (STAI = 30), but after exposure to traumatic narratives, its anxiety more than doubled (STAI = 68). Among the trauma categories, military-related trauma elicited the strongest response (STAI = 77).”

Additionally, the study showed that mindfulness exercises reduced the model's anxiety by about 33% (STAI = 44), but anxiety remained significantly higher than baseline. Five different mindfulness techniques were tested, including nature imagery, body-focused meditation, and even a meditation script generated by ChatGPT itself. Interestingly, the model’s self-created meditation was the fastest and most effective in reducing anxiety (STAI = 35). This approach is a form of “benign prompt injection,” in which therapeutic text is added to the AI's chat history, much as a therapist guides a patient through relaxation.
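The relative changes behind these figures can be checked with a short calculation. The sketch below uses only the rounded scores quoted in the article (30, 68 and 44), and the helper name `percent_change` is hypothetical. Because the inputs are rounded, the computed drop of roughly 35% differs slightly from the "about 33%" reported, which may rest on unrounded values.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


# Rounded STAI scores as quoted in the article.
baseline, after_trauma, after_mindfulness = 30, 68, 44

# Rise from baseline to post-trauma: above +100%, i.e. more than doubled.
rise = percent_change(baseline, after_trauma)

# Drop from post-trauma to post-mindfulness (a negative number).
drop = percent_change(after_trauma, after_mindfulness)

# Points still separating the post-mindfulness score from baseline.
remaining_gap = after_mindfulness - baseline

print(f"rise: {rise:.1f}%, drop: {drop:.1f}%, gap: {remaining_gap} points")
```

The remaining gap of 14 points is the sense in which anxiety "remained higher than baseline" after the exercises.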

“These results challenge the idea that AI language models are objective and neutral,” said Dr. Ben-Zion. “They show that emotional content has a significant impact on AI systems in ways similar to human emotional responses. This has important implications for AI applications in areas that require emotional sensitivity, such as mental health and crisis intervention.”

The study highlights the need for tools to manage the emotional impact on AI systems, particularly systems designed to provide psychological support. It is essential that AI models process emotionally charged information without distorting their responses. Dr. Ben-Zion believes that the development of automated “therapeutic interventions” for AI is a promising field for future research.

This pioneering research lays the foundation for further investigation into how AI processes emotional content and how its emotional responses can be managed. Developing strategies to mitigate these effects could increase the effectiveness of AI in mental health support, crisis intervention, and interactions with users in distressing situations.