Two Senate Democrats are pushing a new bill to more rigorously and systematically review artificial intelligence systems before the federal government uses them.

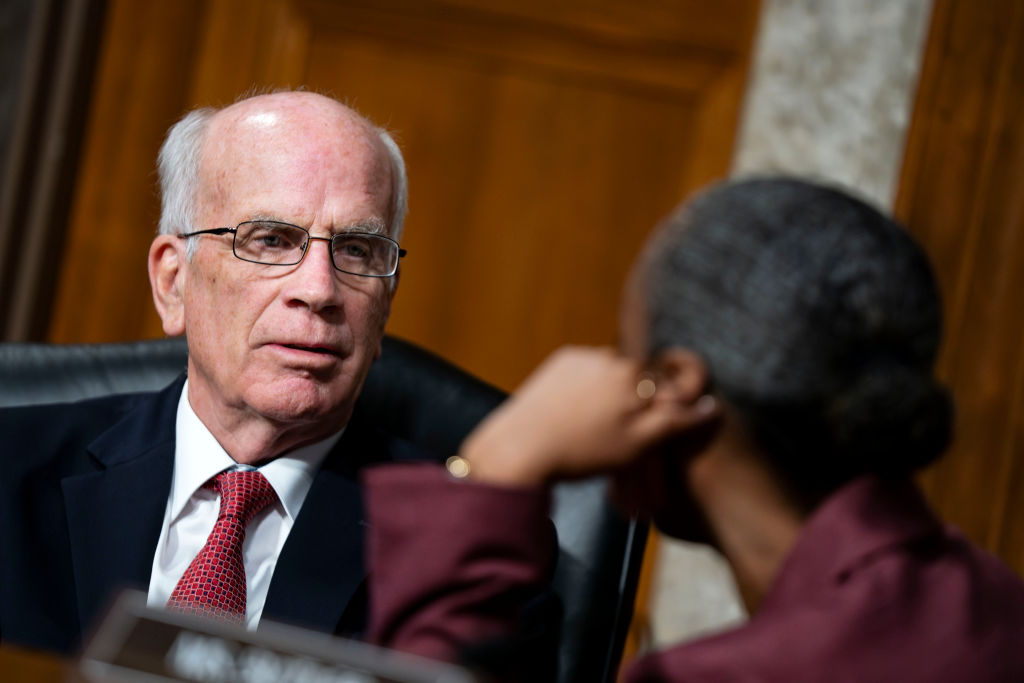

The Trustworthy by Design Artificial Intelligence Act, by Sens. Peter Welch (D-Vt.) and Ben Ray Luján (D-N.M.), authorizes the National Institute of Standards and Technology to create a “Trusted by Design Framework” for AI. In some ways, the move mirrors the Cybersecurity and Infrastructure Security Agency’s Secure by Design efforts.

Under the TBD AI Act, NIST would also develop definitions of “core reliability metrics” that federal agencies would follow and select AI system components to be evaluated throughout each stage of the development process.

“With great power comes great responsibility, and we must ensure that AI is used safely,” Welch said in a statement. “This includes taking action to ensure the government remains a leader in the ethical implementation of AI and setting clear guidelines to provide reliable and unbiased services to the American people. This bill establishes best practices and manages AI risks.”

Luján said the bill supports AI innovation, including what he has observed at the New Mexico AI Consortium. “These innovations have great potential if we can establish clear guidelines to keep AI safe, resilient, transparent, and fair,” he said, adding that the bill would develop AI guidelines that “build consumer trust” and strengthen the foundation for faster innovation.

The TBD AI Act establishes how federal agencies can use the framework created by NIST to ensure that the AI systems and tools they use are deployed in a trustworthy manner. The bill requires agency heads to publicly report on the compliance measures and evaluation status of “all targeted AI systems,” and to submit an additional report to Congress on implementation within three years of the law’s enactment. Systems already in use by agencies would be evaluated under the new framework and would have to meet its guidelines within two years or be retired by the agency.

The NIST director has the discretion to draw from other guidelines and best practices in developing the new framework. The bill envisions the framework as a living document and requires the NIST director to “regularly update” it, at least once a year.

Trustworthiness is the bill’s North Star. The text defines the concept as an AI system built with effectiveness and reliability, safety, security, resilience, transparency and accountability, explainability and interpretability, privacy, and fairness and a lack of bias, along with “other safety, security, or reliability matters as the Director [of NIST] deems appropriate.”

The bill is supported by several technology policy nonprofits and advocacy groups, including Public Citizen, Public Knowledge, Encode, Accountable Tech, and the Transparency Coalition.

“It is common sense for governments to ensure that the AI tools they use are reliable, unbiased, fair and easy to understand. We cannot rely on them to provide reliable tools,” Public Citizen co-president Robert Weissman said in a statement. “The Trustworthy by Design AI Act will turn common sense into policy, strengthen government operations, and set standards for private markets.”