As the Carney government pushes artificial intelligence as a driver of Canada’s economic growth, some inventors using the technology and experts studying its impact are calling on Ottawa to put more guardrails in place, something the federal government says it is actively considering.

“To be honest, the guardrails we have now are self-imposed by big tech companies, and what we’re seeing is that they’re not enough,” said Valérie Pisano, CEO of Mila, an AI institute in Montreal.

In an interview with CBC’s The House that aired Saturday morning, Pisano said her goal is to find protections that sit somewhere between “a world that allows companies to do whatever they want” and one that hinders innovation.

“We know how to do this,” Pisano said. “We’ve done this in every impactful, innovative and transformative industry. We’ve done it in aviation, we’ve done it in pharmaceuticals, we’ve done it in nuclear power.”

Pisano said she is closely monitoring a range of risks posed by rapidly evolving AI, including how children interact with the technology, the impact AI data centers will have on the environment, and the potential for AI to displace young workers.

“I’ve been noticing more and more people having intimate relationships with AI bots,” Pisano said. “What bothers me is that this is a first… It’s completely new.”

Here, too, Pisano said AI could have a positive impact: socially awkward young people could use conversations with an AI chatbot to build their social skills, though they would also need other support around them.

“But you could also go completely to the other end of the spectrum, and what you see there is frightening. You’re completely cut off from human contact,” Pisano said.

AI Minister Evan Solomon told The House that the federal government is looking closely at these and other concerns to determine how best to address them.

“I’m very aware that there are absolutely dangerous aspects around children who use this,” Solomon told host Katherine Cullen. “But all we need is to understand how to use this tool properly.”

“I don’t want to say, ‘It’s all hype, it’s all confetti, this is going to be great,’ or ‘It’s destiny.’ We have to be realistic,” Solomon said. “We have a lot of control here, so we have to plan and get this right.”

Those plans will come from various departments of the federal government, Solomon said.

He explained that his office is responsible for data privacy and plans to introduce legislation on this issue by the end of the year.

Solomon added that Canadian Identity and Culture Minister Steven Guilbeault would tackle online harms, while Justice Minister Sean Fraser would focus on criminal law reform.

The Liberal government has also set up a new AI strategy task force, which is to consult its networks by the end of this month and submit ideas in November. Solomon said he expects a revamped national AI strategy to be completed by the end of this year.

The group has been asked to consider various aspects of AI, including research, implementation, commercialization, investment, infrastructure, skills, and safety and security. The government is also holding a public consultation on its AI strategy.

Critics of the task force told The Canadian Press this week that it leans too heavily toward industry and technology perspectives, noting that only three of its more than 20 members have been asked to focus on safe AI systems and public trust.

Inventor echoes call for safety measures

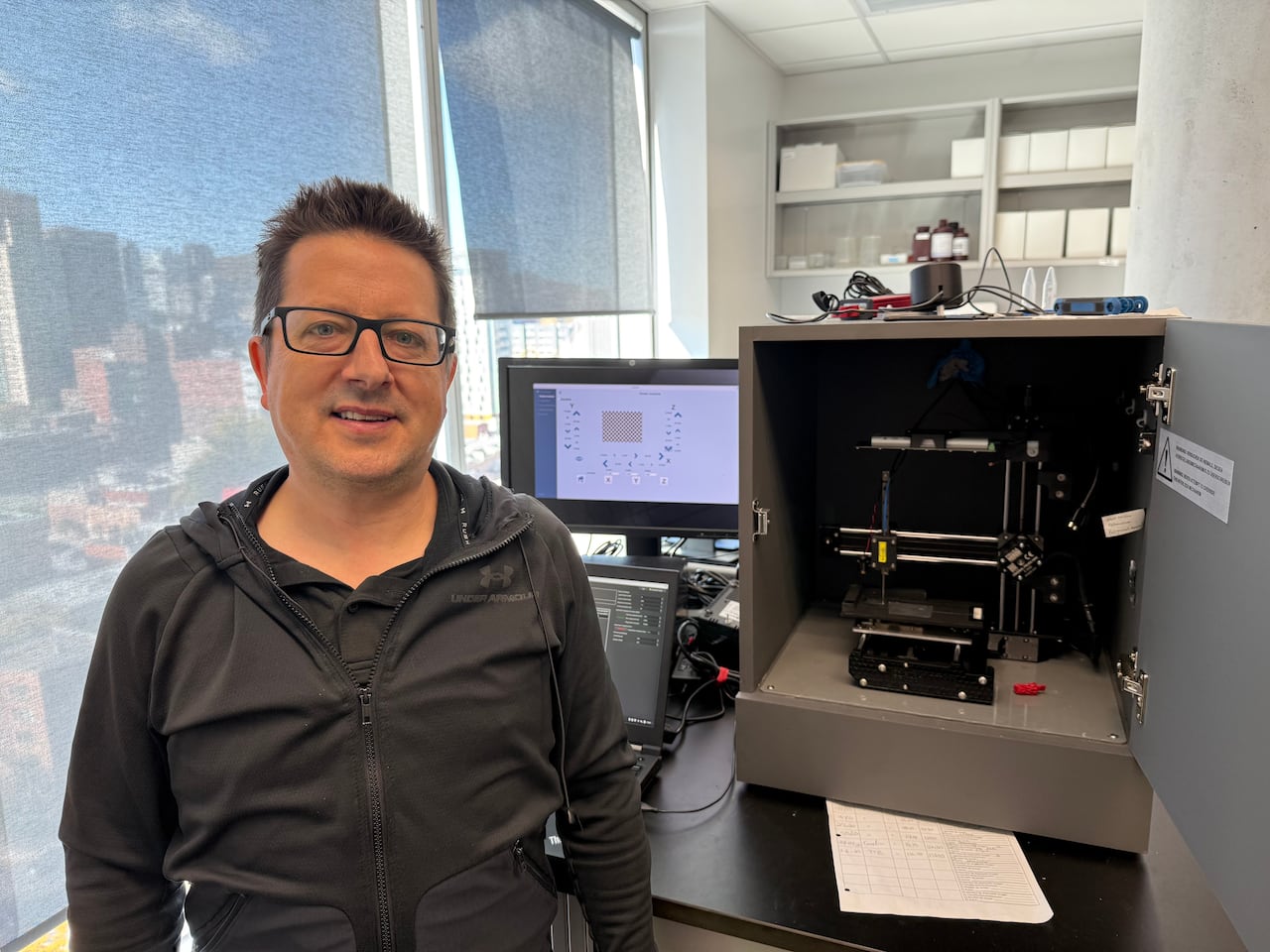

Frédéric Leblond of the University of Montreal Hospital Centre said the device he co-invented, a handheld tool called Sentry that combines lasers and AI to determine in real time whether tissue is cancerous or healthy, is used in up to five surgeries a week.

“It can be used to make sure that no tumour is left behind after surgery,” Leblond said. “That way, a second surgery can be avoided, and in some cases, the surgery may even cure [the patient].”

Leblond said Sentry sends back complex signals about the tissue it analyzes, and AI is used to make sure the readings are not misinterpreted. He said AI “should and will play a role” in supporting decision-making, but it cannot be the one making the final decisions.

“We need guardrails,” Leblond said. “And it takes experts to set them.”

Leblond said AI has the potential to transform Canadian health care, but the guidelines governing the technology need to evolve to prevent problems such as AI bias (for example, an algorithm trained only on data from young men).

“There are many examples like that and I think this needs to be incorporated into the regulations,” he said.

AI and environmental risks

That is where data centers come in, because Canada needs more computing power to expand its AI industry.

Late last year, the federal government launched the Canadian Sovereign AI Compute Strategy, announcing it would invest up to $700 million to “leverage investments and build Canada’s AI champions” in new or expanded data centres.

Hamish van der Ven, an assistant professor at the University of British Columbia who specializes in sustainable natural resource management, said data centers require enormous amounts of power, which makes him question how much value they offer.

“It’s good that some data centers rely on clean energy, but that doesn’t mean data centers that run on dirtier, carbon-intensive energy can’t open up and compete with data centers that run on clean energy sources,” he said.

The International Energy Agency predicted earlier this year that electricity demand from data centers around the world will more than double to about 945 terawatt-hours by 2030, slightly more than Japan’s entire electricity consumption.

If Canada decides that data centers will play a role in its future economy, “data centers will need to adhere to the precautionary principle and be embedded within a robust regulatory framework that requires net zero emissions and no new demands on the energy grid,” van der Ven said.

Angela Adam, senior vice-president of eStruxture, Canada’s largest data center provider, told The House that data centers are “truly the foundational layer” on which innovators build AI products.

She also said data centers located in Canada can safely store Canadians’ data because they are bound by Canadian law. That point is central to Carney’s plan to build a “sovereign cloud” that gives users more control over where their data goes.

“When you own your data, you own your destiny,” Adam said. “Data centers and data are a national security issue at this point, and for good reason.”

When asked about environmental concerns, Adam said her company is committed to using clean power and that eStruxture has “always managed sustainability, but we need more power, that’s a fact.”

“The need for electricity is not going to decrease,” Adam said. “How we work together with governments, power producers and regulators is another story. We need to come together and find ways to power these workloads in the most sustainable way possible.”

Asked if Canada would place limits on the energy used in data centres, Solomon said it was primarily the responsibility of the provinces. But he noted that if data centers are connected to Canada’s power grid, they are obligated to follow clean power regulations.

Solomon also said Canada is considering proposals for what a sovereign cloud or sovereign data center might look like. “Obviously we want to comply with clean energy regulations. Those are the factors we’re considering.”